Exploring Graph Representation Learning: Techniques and Applications


Chapter 1: Introduction to Graph Representation Learning

Graph representation learning involves mapping nodes or entire graphs into a low-dimensional vector space.

Prerequisite Knowledge

Before diving into graph representation learning, it's essential to understand basic graph concepts and their applications. Graphs are fundamental non-linear data structures that model complex systems as a set of objects, called nodes or vertices, and the connections between them, called edges or links.
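As a quick refresher, the two most common in-memory representations of a graph are an adjacency list and an adjacency matrix. The sketch below builds both for a small, made-up undirected graph; the edge list and variable names are illustrative only.

```python
import numpy as np

# A small undirected graph given as an edge list (toy data for illustration).
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
num_nodes = 4

# Adjacency list: each node maps to the set of its neighbors.
adj_list = {v: set() for v in range(num_nodes)}
for u, v in edges:
    adj_list[u].add(v)
    adj_list[v].add(u)  # undirected: store both directions

# Adjacency matrix: A[u, v] = 1 if there is an edge between u and v.
A = np.zeros((num_nodes, num_nodes), dtype=int)
for u, v in edges:
    A[u, v] = A[v, u] = 1

print(adj_list)       # {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
print(A.sum(axis=1))  # node degrees: [2 2 3 1]
```

Adjacency lists are compact for sparse graphs; the matrix form is convenient for the linear-algebra view used by many embedding methods.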

Why Graph Representation Learning is Important

Traditional deep learning methods excel on data with a regular structure, such as images (pixel grids), text (token sequences), and tables. Graphs are harder: there is no fixed grid or sequence to exploit. The core challenge is to efficiently learn feature representations that are not tied to one specific task while still capturing the graph's inherent structure.

Take, for instance, item recommendation framed as link prediction: predicting whether a user will interact with an item requires encoding the relationships between users and items effectively. Graphs are irregular and dynamic; they vary in their number of nodes, node degrees, and connectivity patterns, which complicates this process.

Understanding Graph Isomorphism

Two graphs are isomorphic if there is a one-to-one mapping between their nodes that preserves adjacency: they have the same number of nodes and the same connectivity pattern, even if they are drawn or labeled differently. This highlights that the nodes of a graph have no inherent ordering, which makes graphs complex structures; they can also evolve dynamically as nodes and edges are added or removed.
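To make the "no inherent ordering" point concrete, the sketch below relabels the nodes of a graph with a permutation matrix P (giving P A Pᵀ) and checks isomorphism by brute force. The helper names `permute` and `are_isomorphic` are hypothetical, and trying every permutation is only feasible for tiny graphs; it is meant to illustrate the definition, not to be a practical isomorphism test.

```python
import itertools
import numpy as np

def permute(A, perm):
    """Relabel nodes: new node i is old node perm[i], i.e. P @ A @ P.T."""
    P = np.eye(len(A), dtype=int)[perm]  # permutation matrix
    return P @ A @ P.T

def are_isomorphic(A, B):
    """Brute-force isomorphism test: try every relabeling (tiny graphs only)."""
    if A.shape != B.shape:
        return False
    n = len(A)
    return any(np.array_equal(permute(A, list(p)), B)
               for p in itertools.permutations(range(n)))

A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]])
B = permute(A, [3, 2, 1, 0])  # same graph, different node labels

print(np.array_equal(A, B))                    # False: the matrices differ...
print(are_isomorphic(A, B))                    # True: ...but the graphs are the same
print(sorted(A.sum(1)) == sorted(B.sum(1)))    # True: the degree multiset ignores ordering
```

This is why graph models are usually designed to be permutation invariant (for whole-graph outputs) or permutation equivariant (for per-node outputs): a relabeled graph should not produce a different answer.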

Chapter 2: The Fundamentals of Graph Representation Learning

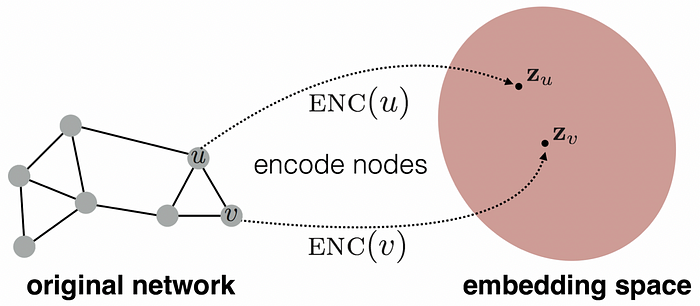

Graph representation learning tackles these complexities by embedding nodes or entire graphs into a low-dimensional space whose geometric relationships reflect the original graph's structure. Learning this representation thus becomes a machine learning problem in its own right, rather than a hand-engineered preprocessing step.

#### Key Considerations

To effectively represent graphs, several aspects must be addressed:

- Preserving structural properties of the graph

- Measuring node similarity in both original and embedded spaces

Node Embeddings

The primary aim of node embedding is to create an encoder that maps nodes into a dense, low-dimensional space, such that similarities in this space correspond to those in the original graph.

Once learned, embeddings can be compared quickly, for example by computing many dot products at once on vectorized, parallel hardware. Building effective node embeddings involves the following steps:

- Define the Encoder (ENC): This maps nodes to embeddings, with a simple method being an embedding lookup that assigns unique vectors to each node.

- Establish a Node Similarity Function: This defines how relationships in the embedding space mirror those in the original graph. Various factors can characterize node similarity, including:

  - Presence of edges

  - Overlapping neighborhoods

  - Reachability via k-hops

  - Random walks

  - Similar attributes

- Optimize Encoder Parameters: The goal here is to ensure that the similarity between nodes in the original graph closely approximates the dot product of their embeddings.
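The three steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the encoder is a plain embedding lookup (a matrix `Z` with one row per node), similarity in the original graph is taken to be edge presence (the adjacency matrix), and the parameters are fit by plain gradient descent on the squared error between `z_u · z_v` and `A[u, v]`, ignoring self-pairs. The embedding size, learning rate, and step count are arbitrary choices for the toy example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy graph (illustrative): 4 nodes, adjacency matrix A.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
n, d = A.shape[0], 2  # d = embedding dimension (assumed)

# ENC as an embedding lookup: row v of Z is the embedding of node v.
Z = 0.1 * rng.standard_normal((n, d))

def enc(v):
    return Z[v]

mask = 1.0 - np.eye(n)  # ignore self-pairs (u == v)

def loss(Z):
    # Squared error between dot-product similarity and edge presence.
    R = mask * (Z @ Z.T - A)
    return float((R ** 2).sum())

initial = loss(Z)
lr = 0.01
for _ in range(2000):
    R = mask * (Z @ Z.T - A)
    Z -= lr * 4 * R @ Z  # gradient of the masked squared (Frobenius) loss, in place
final = loss(Z)

print(f"loss {initial:.3f} -> {final:.3f}")
print("similarity(0, 1) ~", enc(0) @ enc(1))
```

Swapping in a different similarity (k-hop reachability, random-walk co-occurrence) changes only the target matrix or the loss; the lookup encoder and dot-product decoder stay the same.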

Two widely used algorithms for node embeddings based on random walks are DeepWalk and Node2Vec.

#### Random Walks and Their Significance

A random walk is a sequence of nodes generated iteratively: the walker starts at a node and, at each step, moves to one of the current node's neighbors chosen at random (uniformly, in the simplest case).

For example, the geography game GeoGuessr drops you at a random location and asks you to recognize your surroundings. Similarly, a random walker on a graph explores a node's neighborhood step by step, without needing to consider all pairs of nodes.

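Random walks are powerful because they are both efficient and expressive: only node pairs that co-occur on walks need to be considered, and short walks capture local structure while longer walks reach more distant, multi-hop neighborhoods.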
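A uniform random walk is only a few lines of code. The sketch below assumes the graph is stored as an adjacency list (a dict of neighbor lists); the function name `random_walk` and the toy graph are illustrative, not from any particular library.

```python
import random

# Toy graph as an adjacency list (illustrative data).
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}

def random_walk(graph, start, length, rng=random):
    """Uniform random walk: at each step, move to a neighbor chosen uniformly at random."""
    walk = [start]
    for _ in range(length - 1):
        neighbors = graph[walk[-1]]
        if not neighbors:  # dead end (possible in directed graphs): stop early
            break
        walk.append(rng.choice(neighbors))
    return walk

rng = random.Random(42)
# Two walks of length 5 from every node.
walks = [random_walk(graph, v, length=5, rng=rng) for v in graph for _ in range(2)]
for w in walks[:3]:
    print(w)
```

Collections of such walks are the raw training data for DeepWalk-style embedding methods.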

Representation Learning on Graphs and Networks - Dr. Petar Veličković - YouTube

This video provides insights into the fundamentals of representation learning on graphs and networks, emphasizing its significance in modern machine learning.

Node2Vec: A Comprehensive Approach

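Node2Vec extends random walks with a flexible, biased (second-order) strategy. Two parameters, the return parameter p and the in-out parameter q, let the walk interpolate between breadth-first-like and depth-first-like exploration, balancing local and global perspectives: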

- Breadth-First Search (BFS): BFS-like sampling keeps the walk close to the start node, giving a localized, microscopic view of its neighborhood.

- Depth-First Search (DFS): DFS-like sampling moves the walk progressively farther from the start node, giving a broader, macroscopic view of the network.
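The biased walk can be sketched as follows for an unweighted graph. Having just moved from `prev` to `cur`, each candidate next node gets an unnormalized weight of 1/p if it returns to `prev`, 1 if it is also a neighbor of `prev` (staying close, BFS-like), and 1/q otherwise (moving outward, DFS-like). The function name and toy graphs are hypothetical; a production implementation would typically precompute these transition probabilities (for example with alias tables) rather than recomputing them at every step.

```python
import random

graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}

def node2vec_walk(graph, start, length, p=1.0, q=1.0, rng=random):
    """Second-order biased walk in the style of node2vec (unweighted graph)."""
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        neighbors = graph[cur]
        if not neighbors:
            break
        if len(walk) == 1:  # first step has no previous node: choose uniformly
            walk.append(rng.choice(neighbors))
            continue
        prev = walk[-2]
        weights = []
        for nxt in neighbors:
            if nxt == prev:
                weights.append(1.0 / p)  # return to the previous node
            elif nxt in graph[prev]:
                weights.append(1.0)      # stay within prev's neighborhood (BFS-like)
            else:
                weights.append(1.0 / q)  # move farther away (DFS-like)
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk

# Large q discourages moving outward (BFS-like); small q encourages it (DFS-like).
print(node2vec_walk(graph, 0, 6, p=1.0, q=4.0, rng=random.Random(1)))
print(node2vec_walk(graph, 0, 6, p=4.0, q=0.25, rng=random.Random(1)))
```

With p = q = 1 every weight is 1, so the walk becomes a uniform random walk.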

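DeepWalk, which predates Node2Vec, uses uniform (unbiased) random walks and treats each walk like a sentence: the node sequences are fed to the SkipGram model from word2vec, which updates each node's representation so that it predicts the nodes appearing near it on walks. Node2Vec's walks reduce to DeepWalk-style uniform walks when its two bias parameters (p and q in the Node2Vec paper) are both set to 1.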
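The SkipGram step can be sketched in two parts: turn walks into (center, context) pairs within a window, then train embeddings so that co-occurring nodes score highly. This sketch uses skip-gram with negative sampling (SGNS), drawing negatives uniformly as a simplification; word2vec samples negatives from a smoothed unigram distribution, and the original DeepWalk paper used hierarchical softmax instead. All function names and hyperparameters are illustrative assumptions.

```python
import numpy as np

def skipgram_pairs(walks, window=2):
    """(center, context) pairs: every node within `window` positions of the center."""
    pairs = []
    for walk in walks:
        for i, center in enumerate(walk):
            lo, hi = max(0, i - window), min(len(walk), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    pairs.append((center, walk[j]))
    return pairs

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_sgns(pairs, num_nodes, dim=8, neg=2, lr=0.05, epochs=50, seed=0):
    """Skip-gram with negative sampling over node pairs (uniform negatives)."""
    rng = np.random.default_rng(seed)
    emb = 0.1 * rng.standard_normal((num_nodes, dim))  # node ("input") vectors
    ctx = np.zeros((num_nodes, dim))                   # context ("output") vectors
    for _ in range(epochs):
        for u, v in pairs:
            # One positive context plus `neg` random negatives (they may occasionally hit v).
            targets = np.concatenate(([v], rng.integers(0, num_nodes, size=neg)))
            labels = np.zeros(neg + 1)
            labels[0] = 1.0
            scores = sigmoid(ctx[targets] @ emb[u])
            g = scores - labels             # gradient of the logistic loss w.r.t. the logits
            grad_u = g @ ctx[targets]       # computed before ctx is updated
            np.add.at(ctx, targets, -lr * np.outer(g, emb[u]))  # handles repeated indices
            emb[u] -= lr * grad_u
    return emb

walks = [[0, 1, 2, 3], [3, 2, 0, 1]]
pairs = skipgram_pairs(walks, window=1)
emb = train_sgns(pairs, num_nodes=4)
print(len(pairs), emb.shape)  # 12 (4, 8)
```

The rows of `emb` serve as the learned node embeddings; the context vectors are usually discarded after training.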

Chapter 3: Graph Embedding Techniques

Graph embedding seeks to represent nodes, edges, subgraphs, or entire graphs in a lower-dimensional vector space. This supports a range of applications, including node classification, link prediction, and subgraph classification.

Graph neural networks compute such embeddings using graph filters, which fall into two primary types:

- Spectral Graph Filtering: This is based on the eigendecomposition of the graph's Laplacian matrix and filters node signals in the graph's frequency domain.

- Spatial Graph Filtering: This approach aggregates local neighborhood information and is favored for its computational efficiency and localized nature.
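The two views can coincide. The sketch below uses the combinatorial Laplacian L = D − A and an arbitrarily chosen filter response g(λ) = 1 − λ/4. Applied spectrally, the filter needs the full eigendecomposition L = U Λ Uᵀ; because g is a first-degree polynomial, the same result is obtained spatially as (I − L/4)x, which only mixes each node's value with its 1-hop neighbors. The toy graph and filter are illustrative assumptions.

```python
import numpy as np

A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
x = np.array([1.0, 0.0, 0.0, 0.0])  # a graph signal: one value per node

D = np.diag(A.sum(axis=1))
L = D - A  # combinatorial graph Laplacian

def g(lam):
    return 1.0 - 0.25 * lam  # example filter response (assumed)

# Spectral filtering: project onto the Laplacian eigenbasis, scale, project back.
lam, U = np.linalg.eigh(L)  # L is symmetric, so eigh applies
y_spectral = U @ np.diag(g(lam)) @ U.T @ x

# Spatial filtering: the same polynomial filter applied directly, 1-hop only.
y_spatial = x - 0.25 * (L @ x)

print(np.allclose(y_spectral, y_spatial))  # True
print(y_spatial)  # nonzero only at node 0 and its neighbors 1 and 2
```

More generally, a degree-K polynomial in L only reaches K hops, which is why polynomial spectral filters can be implemented as cheap, localized spatial operations.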

Graph Neural Networks (GNN)

The GNN framework relies on an iterative process of aggregation and updating: in each layer, every node aggregates information from its neighbors and uses it to update its own embedding, so after k layers a node's embedding reflects its k-hop neighborhood.
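One aggregate-and-update step can be sketched with matrix operations. Here the aggregation is a plain sum over neighbors (A @ H) and the update is a ReLU over a linear combination of a node's own features and the aggregated message. These specific choices, the function name `gnn_layer`, and the random weights are illustrative assumptions; in practice the weights are learned.

```python
import numpy as np

def gnn_layer(A, H, W_self, W_neigh):
    """One aggregate-and-update step.

    AGGREGATE: m_v = sum of neighbor features, i.e. row v of A @ H
    UPDATE:    h_v' = ReLU(h_v @ W_self + m_v @ W_neigh)
    """
    messages = A @ H
    return np.maximum(0.0, H @ W_self + messages @ W_neigh)

rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = rng.standard_normal((4, 3))  # 3 input features per node (toy data)
W_self = rng.standard_normal((3, 5))
W_neigh = rng.standard_normal((3, 5))

H1 = gnn_layer(A, H, W_self, W_neigh)  # after 1 layer: 1-hop information
H2 = gnn_layer(A, H1, rng.standard_normal((5, 5)), rng.standard_normal((5, 5)))  # 2 hops
print(H2.shape)  # (4, 5)
```

Because the same weights are shared by every node and the aggregation is a sum, relabeling the nodes simply relabels the output rows; the layer is permutation equivariant.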

#### Graph Convolutional Networks (GCN)

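GCNs generalize the convolution operation to non-Euclidean data such as graphs. Each layer averages a node's features with its neighbors' features using a normalized adjacency matrix, applies a shared linear transformation, and then applies a point-wise non-linearity; stacking multiple layers widens each node's receptive field.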
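A single GCN layer in the form popularized by Kipf and Welling computes ReLU(D̃^(-1/2) Ã D̃^(-1/2) H W), where Ã = A + I adds self-loops and D̃ is the degree matrix of Ã. The sketch below is a dense NumPy version for small graphs; the function name and random weights are illustrative, and real implementations use sparse matrices.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One GCN layer: ReLU(D~^-1/2 (A + I) D~^-1/2 @ H @ W)."""
    A_tilde = A + np.eye(len(A))                # add self-loops
    d = A_tilde.sum(axis=1)                     # degrees including the self-loop
    D_inv_sqrt = np.diag(1.0 / np.sqrt(d))
    A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt   # symmetric normalization
    return np.maximum(0.0, A_hat @ H @ W)       # point-wise non-linearity

rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = rng.standard_normal((4, 3))

# Two stacked layers: 3 -> 8 -> 2 features per node.
H1 = gcn_layer(A, H, rng.standard_normal((3, 8)))
H2 = gcn_layer(A, H1, rng.standard_normal((8, 2)))
print(H2.shape)  # (4, 2)
```

The self-loop keeps each node's own features in the average, and the symmetric normalization prevents high-degree nodes from producing disproportionately large values.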

#### GraphSAGE

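GraphSAGE learns aggregator functions that combine the attributes of a node's sampled neighbors, rather than learning a separate embedding vector for every node. Because these functions depend only on features, the model can efficiently generate representations for previously unseen nodes, or even entirely new graphs, as long as they share the same attribute schema as the training data.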
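The inductive idea can be sketched with a mean aggregator: for each node, sample a few neighbors, average their features, concatenate the result with the node's own features, apply a shared weight matrix and ReLU, then L2-normalize. This is a simplified sketch: neighbors are sampled without replacement up to a cap, the weights are random rather than trained, and the function name `sage_layer` is hypothetical.

```python
import numpy as np

def sage_layer(adj_list, H, W, num_samples=2, rng=None):
    """GraphSAGE-style layer with a mean aggregator.

    W has shape (2 * f_in, f_out) and is shared by all nodes, so the same
    layer can be applied to nodes or graphs not seen during training.
    """
    if rng is None:
        rng = np.random.default_rng()
    out = []
    for v in range(len(H)):
        neigh = adj_list[v]
        if neigh:
            k = min(num_samples, len(neigh))
            sampled = rng.choice(neigh, size=k, replace=False)  # fixed-size neighbor sample
            h_neigh = H[sampled].mean(axis=0)
        else:
            h_neigh = np.zeros(H.shape[1])  # isolated node: no neighbor signal
        z = np.maximum(0.0, np.concatenate([H[v], h_neigh]) @ W)
        norm = np.linalg.norm(z)
        out.append(z / norm if norm > 0 else z)
    return np.stack(out)

rng = np.random.default_rng(0)
W = rng.standard_normal((2 * 3, 4))  # shared weights (would be learned in practice)

g1 = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
H1 = rng.standard_normal((4, 3))
g2 = {0: [1], 1: [0, 2], 2: [1]}      # a different, "unseen" graph
H2 = rng.standard_normal((3, 3))      # same 3-feature attribute schema

Z1 = sage_layer(g1, H1, W, rng=rng)
Z2 = sage_layer(g2, H2, W, rng=rng)   # same W applied without retraining
print(Z1.shape, Z2.shape)  # (4, 4) (3, 4)
```

Nothing in `W` depends on the number or identity of nodes, which is what makes the approach inductive.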

#### Graph Attention Networks (GAT)

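GATs use self-attention to compute node representations: each node attends over its neighbors and learns how much weight each one deserves, instead of using the fixed, degree-based weights of a GCN. Attention scores are computed independently for each edge, so the model is efficient and parallelizable while allowing different neighbors to contribute with different levels of importance.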
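A single attention head can be sketched following the formulation in the Graph Attention Networks paper: scores e_vu = LeakyReLU(aᵀ[W h_v ‖ W h_u]) are computed for each neighbor u of v (including v itself), normalized with a softmax over the neighborhood, and used to weight the transformed neighbor features, followed by an ELU. This is a dense, single-head sketch with random parameters; multi-head attention, dropout, and training are omitted, and the function names are hypothetical.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer(A, H, W, a):
    """Single-head graph attention layer (dense, for small graphs)."""
    Wh = H @ W                                   # (n, f_out) transformed features
    f = Wh.shape[1]
    # a^T [Wh_v || Wh_u] splits into (a[:f] . Wh_v) + (a[f:] . Wh_u)
    e = leaky_relu((Wh @ a[:f])[:, None] + (Wh @ a[f:])[None, :])
    mask = (A + np.eye(len(A))) > 0              # attend to neighbors and self only
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)         # numerical stability for softmax
    alpha = np.exp(e)                            # exp(-inf) = 0 for non-neighbors
    alpha /= alpha.sum(axis=1, keepdims=True)    # softmax over each neighborhood
    out = alpha @ Wh                             # attention-weighted aggregation
    elu = np.where(out > 0, out, np.expm1(np.minimum(out, 0.0)))
    return elu, alpha

rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = rng.standard_normal((4, 3))
W = rng.standard_normal((3, 2))
a = rng.standard_normal(4)                       # attention vector of size 2 * f_out

H_out, alpha = gat_layer(A, H, W, a)
print(np.round(alpha, 2))  # row v: how much node v attends to each node
```

Each row of `alpha` sums to 1 and is zero outside the node's neighborhood, so the learned weights only redistribute importance among actual neighbors.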

Graph Representation Learning for Algorithmic Reasoning - YouTube

This video delves into the application of graph representation learning for algorithmic reasoning, showcasing its potential to enhance machine learning methodologies.

Conclusion

Node and graph embeddings represent complex structures in low-dimensional spaces while preserving critical topological and content information. This capability underpins many graph analytics tasks, such as classification, clustering, and recommendation.
