How an Hours-Long Outage Exposed Reddit's Code Knowledge Gap


Chapter 1: The Outage Incident

Reddit recently suffered a significant outage lasting several hours, a reminder that even well-established platforms can falter. The development team published an in-depth analysis of the March 14 incident. It found that the disruption stemmed not only from technical faults but also from a notable lack of familiarity with the team's own code and systems.

Section 1.1: Triggering Events

According to the analysis, an upgrade from Kubernetes 1.23 to 1.24 triggered an unforeseen failure despite extensive prior testing. After several hours of downtime, the team decided to roll back to the previous version and restore a backup. This eventually resolved the outage, although at that point the precise cause of the failure was still unknown.

Subsection 1.1.1: The Search for the Cause

The team compared hunting for the problem in the logs to searching for a needle in a haystack. They eventually found that the network mesh linking the cluster nodes had gone down because all of its routes had been discarded. Reddit manages this network with route reflectors rather than a full-mesh setup, and a configuration oversight in that arrangement turned out to be the root cause of the outage.
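The article does not describe the exact configuration involved, so the following Python sketch is purely illustrative. First, it compares how many BGP peering sessions a full mesh needs with how many a route-reflector layout needs. Second, it shows one way a reflector setup can fail silently: if reflectors are chosen by a node label that upgraded nodes no longer carry, the selector matches nothing and client nodes are left with no peers to learn routes from. The node names, the label-based selection rule, and the helper functions are all hypothetical assumptions, not Reddit's actual setup.

```python
# Illustrative sketch only: node names, labels, and the rule that
# "nodes carrying a given label become route reflectors" are assumptions.

LEGACY_LABEL = "node-role.kubernetes.io/master"          # older control-plane label
CURRENT_LABEL = "node-role.kubernetes.io/control-plane"  # newer control-plane label


def full_mesh_sessions(n: int) -> int:
    """BGP sessions needed when every node peers with every other node."""
    return n * (n - 1) // 2


def reflector_sessions(n: int, r: int) -> int:
    """Sessions when n - r clients each peer with all r reflectors,
    and the r reflectors are fully meshed among themselves."""
    return (n - r) * r + full_mesh_sessions(r)


def pick_reflectors(nodes: dict[str, set[str]], label: str) -> list[str]:
    """Hypothetical rule: every node carrying `label` acts as a route reflector."""
    return sorted(name for name, labels in nodes.items() if label in labels)


def peers_of(node: str, reflectors: list[str]) -> list[str]:
    """A node exchanges routes only with the reflectors it peers with."""
    return [r for r in reflectors if r != node]


# Assumed state before the upgrade: control-plane nodes carry both labels.
before = {
    "cp-1": {LEGACY_LABEL, CURRENT_LABEL},
    "cp-2": {LEGACY_LABEL, CURRENT_LABEL},
    "worker-1": set(),
}
# Assumed state after the upgrade: the legacy label is no longer applied.
after = {
    "cp-1": {CURRENT_LABEL},
    "cp-2": {CURRENT_LABEL},
    "worker-1": set(),
}

if __name__ == "__main__":
    print(full_mesh_sessions(1000))     # 499500
    print(reflector_sessions(1000, 3))  # 2994
    # The selector still names the legacy label, so after the upgrade it matches nothing.
    print(peers_of("worker-1", pick_reflectors(before, LEGACY_LABEL)))  # ['cp-1', 'cp-2']
    print(peers_of("worker-1", pick_reflectors(after, LEGACY_LABEL)))   # []
```

In this toy model, three reflectors serving 1,000 nodes need 2,994 sessions, while a full mesh needs 499,500. That difference is why reflectors are attractive at scale. The trade-off is that the reflectors become a single dependency, and the rule that selects them has to survive every upgrade.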

Section 1.2: Implications for Large Platforms

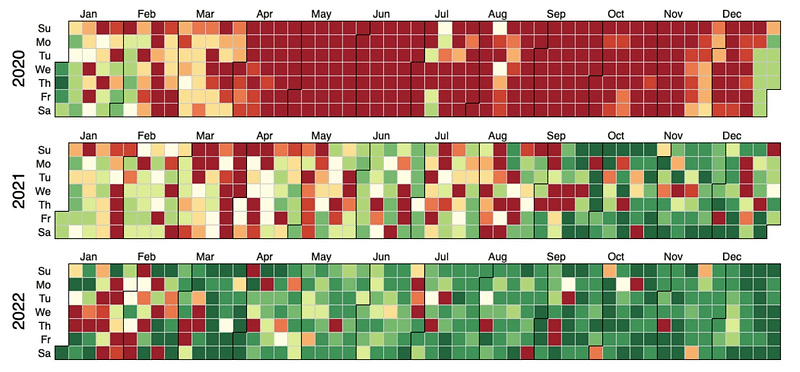

Reddit's analysis includes statistics showing that stability has improved over time, yet being offline for hours can still hit large communities hard. It remains unclear whether the problems stemmed from software engineering or from infrastructure failures. What is evident is that versioning and code documentation need attention, especially as infrastructure is increasingly defined in code.
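One way to act on the versioning point is to make upgrade assumptions checkable. The following Python sketch is a hypothetical pre-upgrade guard, not something the article attributes to Reddit. Given the node labels that various configs depend on, and a projection of the labels nodes will carry after an upgrade, it reports every selector that would silently match no node. The `Selector` type, the config owner names, and the label names are illustrative assumptions.

```python
# Hypothetical pre-upgrade guard: flag config selectors that no node will
# satisfy once the upgrade is applied. Config format and labels are assumed.

from dataclasses import dataclass


@dataclass(frozen=True)
class Selector:
    owner: str  # config object that depends on this selector
    label: str  # node label the selector requires


def dangling_selectors(selectors: list[Selector],
                       nodes_after: dict[str, set[str]]) -> list[Selector]:
    """Return the selectors whose label appears on no node after the upgrade."""
    present = set().union(*nodes_after.values())  # all labels still in use
    return [s for s in selectors if s.label not in present]


if __name__ == "__main__":
    selectors = [
        Selector("route-reflector-config", "node-role.kubernetes.io/master"),
        Selector("ingress-pool", "pool/ingress"),
    ]
    # Projected labels once the upgrade has been applied (assumed).
    nodes_after = {
        "cp-1": {"node-role.kubernetes.io/control-plane"},
        "ingress-1": {"pool/ingress"},
    }
    for s in dangling_selectors(selectors, nodes_after):
        print(f"{s.owner}: no node carries {s.label!r}")
```

A check like this only helps if the projected labels reflect the target version's documented behavior. That is where written-down knowledge about the infrastructure earns its keep.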

Chapter 2: Lessons Learned

The first video, "The Bug that Broke Reddit for 314 Minutes," walks through the specifics of the outage and the complexities and challenges Reddit's team faced during the crisis.

Incidents like this should serve as a wake-up call for organizations with limited expertise and resources. Long-established and mid-sized companies are often poorly prepared for such failures, which underlines the need to prioritize in-house know-how about the technical infrastructure they run.
