Addressing Cloud Development Challenges: Ensuring Reliability


Chapter 1: The Current State of Cloud Development

The cloud landscape has become increasingly challenging for developers, contributing significantly to production failures.


When I bring up the topic of configuration management, I can almost feel the collective shudder from seasoned developers. The number of failed releases due to seemingly minor configuration changes is staggering. Even Google has documented several incidents caused by configuration mistakes, including a notable case involving a URL deny-list that led to users seeing warnings.

In theory, modern deployment strategies should catch these errors before they reach production. So why do we still encounter these issues? The uncomfortable truth is that lazy instantiation, where a service defers checking its configuration until first use, is a major factor. It’s high time we confront these challenges head-on.

Section 1.1: Understanding Lazy Instantiation

So, what exactly is lazy instantiation, and how does it relate to production failures linked to configuration changes? The simplest way to clarify this concept is through an illustrative example.

Consider a snippet of Go code that interacts with an external MySQL database:

// Example code snippet

If the connection string (connStr) is incorrect, does this code cause a panic? Surprisingly, the answer is no. Although it seems like a connection to the database is being established, what actually happens is that a database pool object is lazily instantiated, delaying the connection until the first request is made.

This behavior can vary depending on the database driver. The documentation for sql.Open suggests validating the data source:

“To verify that the data source name is valid, call Ping.”

Suppose you have an API server that passes this database object to request handlers. In such a case, the server may start up and appear healthy, but every incoming request could fail due to a misconfiguration.

In essence, lazy instantiation means waiting for the first operation before performing tasks like establishing a database connection. This creates a significant issue: we cannot tell that a service is misconfigured until a real request fails. The key takeaway is that services ought to validate their configuration settings at startup; otherwise a bad setting stays hidden until production traffic exposes it.

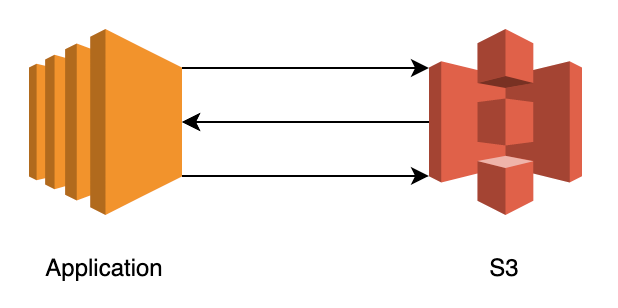

Section 1.2: The Challenge of S3 Bucket Policies

Now, what happens when validating configuration is more complex than simply calling Ping? Let's examine the challenges associated with verifying S3 bucket policies. For those who may not know, S3 is Amazon's object storage service, which simplifies cloud file storage but requires proper setup and configuration.

Typically, when working with S3, we use credentials that possess various permissions—such as ListBucket, GetObject, PutObject, and DeleteObject. If our application relies on a credential that needs to perform these actions, how can we ensure that the credential is valid at startup?

To confirm that a credential is valid, we would ideally attempt to list, put, get, and delete an object. Unfortunately, S3 offers no direct method for testing what a credential is allowed to do, so the validation has to be improvised, along the lines of this example:

// Example validation code

The primary lesson here is that when developing software for developers, we should strive to meet the comprehensive needs of our users. A robust API with exceptional durability is fantastic, but we must consider how it integrates into broader practices like continuous integration and delivery.

Chapter 2: Ensuring Configuration Validity

The notion that this issue is limited to Amazon or other SaaS/PaaS providers is a misconception. Let’s examine one more case that illustrates this point.

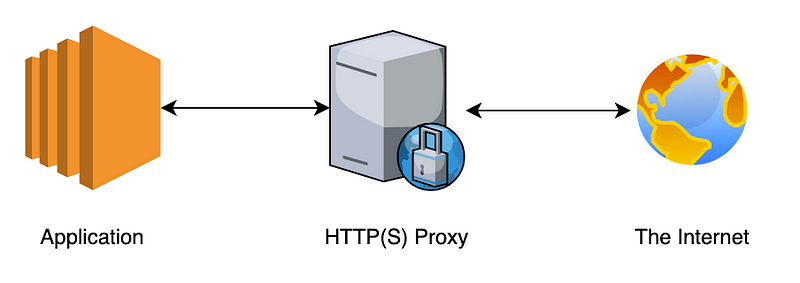

Section 2.1: Proxy Configuration Challenges

Recently, I encountered a situation involving an outbound HTTP(S) proxy. In such a setup, your application sends outbound HTTP requests to a proxy, which in turn communicates with the internet. How can you ensure that the proxy is properly configured without making an outbound request?

One might consider making a HEAD request to the proxy with the correct headers, and if we receive a 200 OK response, everything should be fine. However, without specific knowledge of the proxy’s implementation, this approach may not be reliable. We could receive a 200 OK response even with incorrect credentials.

For instance, using the hprox tool, we could issue the following commands:

hprox -p 1122 -a userpass.txt &

curl -I localhost:1122

This command may return a 200 OK response even with bad credentials. To accurately verify the proxy configuration, we need to implement a check that adheres to standards, as outlined in RFC 7231.

Ultimately, we can develop validation code to ensure that any misconfigurations are caught during startup:

// Validation code for proxy configuration
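The original proxy check is not shown, so here is one possible shape for it, offered as a sketch rather than the author's implementation. It assumes the proxy uses Basic authentication and sends a CONNECT request (RFC 7231, section 4.3.6), treating only a 2xx reply as success and a 407 Proxy Authentication Required reply as a credential failure. `checkProxy` and `newFakeProxy` are hypothetical names; the fake proxy is a local test server standing in for a real one, and `example.com:443` is an arbitrary target.

```go
package main

import (
	"bufio"
	"encoding/base64"
	"fmt"
	"net"
	"net/http"
	"net/http/httptest"
	"time"
)

// checkProxy sends a CONNECT request for target to the proxy at proxyAddr
// and treats only a 2xx response as proof that the credentials work.
func checkProxy(proxyAddr, target, user, pass string) error {
	conn, err := net.DialTimeout("tcp", proxyAddr, 5*time.Second)
	if err != nil {
		return fmt.Errorf("dial proxy: %w", err)
	}
	defer conn.Close() // closing the connection also tears down any tunnel
	conn.SetDeadline(time.Now().Add(5 * time.Second))

	auth := base64.StdEncoding.EncodeToString([]byte(user + ":" + pass))
	req := fmt.Sprintf("CONNECT %s HTTP/1.1\r\nHost: %s\r\nProxy-Authorization: Basic %s\r\n\r\n",
		target, target, auth)
	if _, err := conn.Write([]byte(req)); err != nil {
		return fmt.Errorf("write CONNECT: %w", err)
	}

	resp, err := http.ReadResponse(bufio.NewReader(conn), &http.Request{Method: http.MethodConnect})
	if err != nil {
		return fmt.Errorf("read proxy response: %w", err)
	}
	switch {
	case resp.StatusCode == http.StatusProxyAuthRequired:
		return fmt.Errorf("proxy rejected credentials: %s", resp.Status)
	case resp.StatusCode < 200 || resp.StatusCode > 299:
		return fmt.Errorf("unexpected proxy response: %s", resp.Status)
	}
	return nil
}

// newFakeProxy is a hypothetical stand-in for a real proxy. It answers
// CONNECT with 200 when the Basic credentials match and 407 otherwise,
// and never opens an outbound connection.
func newFakeProxy(user, pass string) *httptest.Server {
	want := "Basic " + base64.StdEncoding.EncodeToString([]byte(user+":"+pass))
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodConnect || r.Header.Get("Proxy-Authorization") != want {
			w.Header().Set("Proxy-Authenticate", `Basic realm="proxy"`)
			w.WriteHeader(http.StatusProxyAuthRequired)
			return
		}
		w.WriteHeader(http.StatusOK)
	}))
}

func main() {
	proxy := newFakeProxy("alice", "s3cret")
	defer proxy.Close()
	addr := proxy.Listener.Addr().String()

	fmt.Println("good credentials:", checkProxy(addr, "example.com:443", "alice", "s3cret"))
	fmt.Println("bad credentials: ", checkProxy(addr, "example.com:443", "alice", "wrong"))
}
```

Against a real proxy, valid credentials will cause it to open a tunnel to the target before replying, so this check is not entirely free of outbound traffic; the tunnel is dropped as soon as the status line has been read.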

The time has come to prioritize configuration validation right from the beginning. A pragmatic approach would be to enhance contract testing practices.

“Contract tests ensure that inter-application communications align with a shared understanding documented in a contract.” — Pact documentation

One of the simplest forms of contract testing involves using Connect or Ping methods. By fostering a culture of comprehensive contract testing within our applications, we can address these issues proactively.

Conclusion: Moving Towards Better Practices

Until every service offers an API designed for validating configuration settings and ensuring runtime usability, we must devise our own strategies to confirm correctness. To minimize the frequency of application crashes post-deployment, we need to:

- Ensure applications validate all configuration options at startup.

- Develop software that aligns with full CI/CD practices.

- Incorporate contract testing as a standard procedure to identify potential gaps within our services.
