Revolutionizing Online Shopping: Google's AI-Powered Virtual Try-On


Chapter 1: Introduction to Google's Virtual Try-On Technology

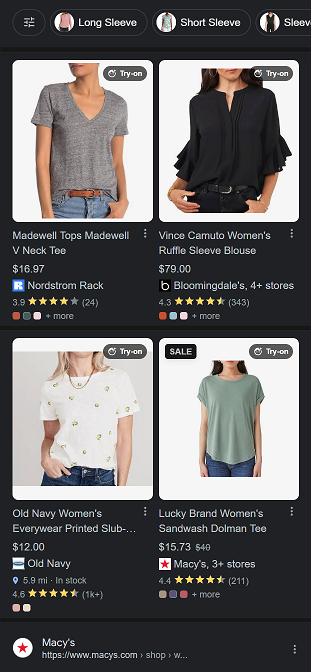

Google has introduced a virtual try-on feature in Google Shopping. For example, a search for women's tops can surface a 4-pack layout that lets users compare how different styles look.

Earlier virtual try-on technologies struggled to depict clothing details accurately and to adapt garments to different poses. Google's new AI model addresses both problems and produces more realistic try-on images that adapt to the wearer's body and pose.

Section 1.1: The Evolution of Virtual Try-On Models

The central difficulty for earlier virtual try-on systems was balancing two goals: preserving garment details, and deforming the garment enough to fit different body shapes and poses. This matters most for garments with intricate details, such as pockets or distinctive sleeves.

Traditional methods split try-on into two stages: a warping model that deforms the garment to match the target body, and a blending model that composites the warped garment onto the person. Some of these methods preserved garment detail well but struggled with pose changes. Others handled pose changes but lost detail.

TryOnDiffusion overcomes these limitations by combining two UNets in a single architecture called Parallel-UNet. Instead of running a separate warping step, it warps the garment implicitly and blends it with the person in one unified process. This lets it preserve clothing details while adapting to significant changes in body shape and pose.
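The TryOnDiffusion paper describes this implicit warping as cross-attention. Features from the person branch act as queries over features from the garment branch, so each person location can pull in detail from whichever garment location matches it, with no explicit warp field. The sketch below is a minimal single-head scaled dot-product cross-attention in plain Python. The feature vectors, their sizes, and the function names are hypothetical. Real models also use learned query/key/value projections, many attention heads, and GPU tensors.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product cross-attention.

    queries: person-branch features, one vector per spatial location (hypothetical).
    keys, values: garment-branch features, one pair per garment location (hypothetical).
    Returns, for each query, a softmax-weighted mix of the garment values.
    """
    if len(keys) != len(values):
        raise ValueError("keys and values must have the same length")
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim_v = len(values[0])
    outputs = []
    for q in queries:
        # How well this person location matches each garment location.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Mix garment features into this location; no explicit warp field is computed.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)])
    return outputs


# Two person locations attend over three garment locations.
person_feats = [[1.0, 0.0], [0.0, 1.0]]
garment_keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
garment_vals = [[10.0], [20.0], [15.0]]
print(cross_attention(person_feats, garment_keys, garment_vals))
```

Each query matches a different garment key. As a result, the first person location ends up closer to the garment value 10 and the second closer to 20.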


Section 1.2: Understanding the Diffusion Model

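TryOnDiffusion is built on diffusion models. During training, noise is added to an image step by step until nothing recognizable remains, and the model learns to reverse that process by removing the noise one step at a time. At generation time, the model starts from pure noise and denoises it into a new, high-quality image. In virtual try-on, the person and garment inputs guide this denoising.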
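The forward (noise-adding) half of this process has a convenient closed form: an image can be noised to any step t in one jump. The sketch below assumes the linear variance schedule from the original DDPM paper (betas from 1e-4 to 0.02 over 1000 steps). TryOnDiffusion's own schedule is not given here. An "image" is simplified to a flat list of floats, and the function names are illustrative.

```python
import math
import random
from typing import List


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances beta_t, increasing linearly (assumed DDPM-style schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): the fraction of signal variance left after t steps."""
    out, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        out.append(running)
    return out


def q_sample(x0: List[float], t: int, alpha_bars: List[float], rng: random.Random) -> List[float]:
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    ab = alpha_bars[t]
    return [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
pixels = [0.5, -0.2, 0.9]
print(q_sample(pixels, 10, alpha_bars, rng))   # still close to the original pixels
print(q_sample(pixels, 999, alpha_bars, rng))  # essentially pure Gaussian noise
```

Because `alpha_bars` shrinks toward zero, early steps barely change the image and the last step is almost pure noise. This matches the "until nothing recognizable remains" description.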

Formally, a diffusion model is a probabilistic model trained to reverse a fixed noise-adding (forward) process. If each forward step adds only a small amount of Gaussian noise, each reverse step can also be modeled as a conditional Gaussian. The neural network then only needs to learn the parameters of those Gaussians, typically by predicting the noise that was added, which makes training efficient.
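A single Gaussian reverse step can be sketched as follows. This assumes the DDPM parameterization, in which a network predicts the added noise and the step variance is simply beta_t. `predict_noise` is a hypothetical stand-in for the trained network (in TryOnDiffusion, a Parallel-UNet conditioned on the try-on inputs). The schedule is the assumed linear one, not a documented TryOnDiffusion setting.

```python
import math
import random
from itertools import accumulate
from operator import mul
from typing import Callable, List

Vector = List[float]
NoisePredictor = Callable[[Vector, int], Vector]  # hypothetical stand-in for eps_theta(x_t, t)


def make_schedule(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Assumed linear betas plus alpha_bar_t = prod(1 - beta_s); num_steps must be at least 2."""
    betas = [beta_start + i * (beta_end - beta_start) / (num_steps - 1) for i in range(num_steps)]
    alpha_bars = list(accumulate((1.0 - b for b in betas), mul))
    return betas, alpha_bars


def reverse_step(x_t: Vector, t: int, betas: List[float], alpha_bars: List[float],
                 predict_noise: NoisePredictor, rng: random.Random) -> Vector:
    """Sample x_{t-1} from the Gaussian p(x_{t-1} | x_t), DDPM-style.

    Mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps_hat) / sqrt(alpha_t).
    Variance: the simple choice sigma_t^2 = beta_t.
    """
    beta = betas[t]
    alpha = 1.0 - beta
    eps_hat = predict_noise(x_t, t)
    coef = beta / math.sqrt(1.0 - alpha_bars[t])
    mean = [(x - coef * e) / math.sqrt(alpha) for x, e in zip(x_t, eps_hat)]
    if t == 0:
        return mean  # last step: return the mean without adding fresh noise
    sigma = math.sqrt(beta)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


def sample(dim: int, betas: List[float], alpha_bars: List[float],
           predict_noise: NoisePredictor, rng: random.Random) -> Vector:
    """Start from pure Gaussian noise and apply every reverse step from t = T-1 down to 0."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        x = reverse_step(x, t, betas, alpha_bars, predict_noise, rng)
    return x


betas, alpha_bars = make_schedule()
x0, eps = [0.3, -0.7], [0.5, -1.2]
# Noise x0 by one step, then undo it with an "oracle" predictor that returns the true noise.
x1 = [math.sqrt(alpha_bars[0]) * a + math.sqrt(1.0 - alpha_bars[0]) * e for a, e in zip(x0, eps)]
recovered = reverse_step(x1, 0, betas, alpha_bars, lambda x, t: eps, random.Random(0))
print(recovered)
```

With a perfect noise prediction, the final step recovers `x0` up to floating-point rounding. Training therefore amounts to making the network's noise predictions as accurate as possible.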

Subsection 1.2.1: Training Steps of the Diffusion Model

In preprocessing, the model derives four inputs from a person photo and a garment photo:

- A clothing-agnostic person image: the person photo with the original clothing removed, keeping the person's identity.

- The person's pose, as 2D keypoints.

- The garment's pose: keypoints of the person wearing the garment in the garment photo.

- The target garment, segmented from the garment photo.
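Two of these inputs come from masking. The sketch below shows the idea on a tiny grayscale image. It assumes a garment mask is already available; in practice a segmentation or human-parsing model would produce it, and a pose estimator would produce the keypoints, and neither is shown here. The function name and the fill value are hypothetical. The same function is applied to the person photo to keep the clothing-agnostic image and to the garment photo to keep the garment segment.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale for brevity; real inputs are RGB
Mask = List[List[int]]     # 1 where the garment is, 0 elsewhere (assumed given)


def split_by_garment_mask(image: Image, mask: Mask, fill: float = 0.0) -> Tuple[Image, Image]:
    """Return (clothing_agnostic, garment_segment) for one photo and its garment mask.

    clothing_agnostic keeps every non-garment pixel (face, hair, arms, background),
    which preserves identity; garment_segment keeps only the garment pixels.
    """
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    agnostic = [[fill if m else p for p, m in zip(row, mrow)] for row, mrow in zip(image, mask)]
    garment = [[p if m else fill for p, m in zip(row, mrow)] for row, mrow in zip(image, mask)]
    return agnostic, garment


person_photo = [[1.0, 2.0], [3.0, 4.0]]
person_mask = [[0, 1], [1, 0]]
agnostic, _ = split_by_garment_mask(person_photo, person_mask)
print(agnostic)  # garment pixels blanked out, everything else kept
```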

The diffusion stages form a cascade. Inside each Parallel-UNet, one UNet processes the clothing-agnostic person image and the other processes the segmented garment, and the pose keypoints guide how garment features are fused into the person branch. A 128x128 Parallel-UNet first produces a low-resolution try-on image. A 256x256 Parallel-UNet then upsamples that result while again conditioning on the try-on inputs. Finally, a super-resolution diffusion model enhances the try-on image from 256x256 to 1024x1024 pixels.
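The TryOnDiffusion paper describes generation as a cascade of three diffusion models: a 128x128 Parallel-UNet, a 256x256 Parallel-UNet conditioned on the 128x128 result, and a 256-to-1024 super-resolution model. The sketch below shows only that data flow. Each stage function is a hypothetical stand-in that does nearest-neighbour resizing where the real system would run a trained denoiser. The stage names and the `cond` dictionary layout are illustrative, not the paper's API.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def resize_nearest(img: Image, size: int) -> Image:
    """Nearest-neighbour resize to size x size (a placeholder for a learned model)."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)] for r in range(size)]


def stage_128(cond: Dict[str, Image]) -> Image:
    # Hypothetical stand-in for the 128x128 Parallel-UNet (person + garment branches).
    return resize_nearest(cond["person_agnostic"], 128)


def stage_256(cond: Dict[str, Image], low_res: Image) -> Image:
    # Hypothetical stand-in for the 256x256 Parallel-UNet. The real stage also reads
    # the try-on inputs in `cond`; this placeholder only upsamples the 128x128 result.
    return resize_nearest(low_res, 256)


def stage_sr_1024(low_res: Image) -> Image:
    # Hypothetical stand-in for the 256 -> 1024 super-resolution diffusion model.
    return resize_nearest(low_res, 1024)


def try_on(cond: Dict[str, Image]) -> Tuple[Image, Image, Image]:
    """Run the three stages in order; each stage's output conditions the next."""
    x128 = stage_128(cond)
    x256 = stage_256(cond, x128)
    x1024 = stage_sr_1024(x256)
    return x128, x256, x1024


x128, x256, x1024 = try_on({"person_agnostic": [[0.0, 1.0], [1.0, 0.0]]})
print(len(x128), len(x256), len(x1024))
```

Splitting generation this way lets the expensive try-on reasoning run at low resolution, while later stages focus on adding detail.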

The first video, AI Meets Fashion: Google's Virtual Try-On Feature Explained, walks through this technology and its implications for online shopping.

Subsection 1.2.2: The Role of Imagen in Super-Resolution

Imagen, Google's text-to-image diffusion model, supplies the final enhancement stage: TryOnDiffusion's 256-to-1024 super-resolution model is based on Imagen's Efficient-UNet architecture. Imagen itself turns text prompts into high-resolution images by combining a frozen text encoder with a cascade of conditional diffusion models that raise the resolution step by step.

Imagen first encodes the input text into embeddings that condition image generation. A base model then generates a 64x64 image, which two super-resolution models upsample to 256x256 and then to 1024x1024.

Chapter 2: The Future of Virtual Try-On Experiences

The virtual try-on feature draws on the Google Shopping Graph, which Google describes as the world's most comprehensive dataset of products and sellers.

The second video, Virtual Clothing Try-On with AI - OOTDiffusion, demonstrates OOTDiffusion, a separate open-source diffusion-based try-on model. It gives a sense of how this kind of system works in practice.

Generative AI has made virtual try-on faster and more scalable. Models like TryOnDiffusion need only two images: one of the person and one of the clothing item.

In conclusion, virtual try-on has evolved significantly and moved away from cumbersome 3D scanning methods. Retailers such as Walmart have introduced similar features that let shoppers upload their own photos to see how clothes would look on them.

credit: Walmart