Navigating Randomness in TensorFlow for Reproducible Results

Written on

Understanding Randomness in Machine Learning

Randomness is an integral aspect of applied machine and deep learning. It serves a crucial function in enhancing the robustness of learning algorithms, which ultimately improves the accuracy of models. This phenomenon is particularly evident in neural networks.

Neural networks utilize randomness to effectively learn the underlying function they aim to approximate, making them inherently stochastic. This implies that even when the same neural network is trained on identical data, it may yield different outcomes upon each execution of the code.

While this stochasticity can enhance model performance, there are scenarios—such as experimentation or educational purposes—where consistent results are crucial. In these instances, ensuring that the code produces the same output repeatedly becomes essential.

In this article, you will discover:

- Various scenarios where randomness is present

- Techniques for achieving reproducible outcomes in TensorFlow

- Possible reasons for persistent randomness

Sources of Randomness

Before addressing solutions, it's crucial to identify where randomness originates. Understanding these sources is key to finding effective solutions.

The first source is the data itself. In machine learning, data can introduce randomness, as training an algorithm multiple times with varying datasets can yield different models. This variability is referred to as "variance."

Additionally, the order in which data samples are presented can also affect results. The arrangement of samples can influence the internal decision-making of algorithms, with some—like neural networks—being more susceptible to this effect.

Sampling techniques, such as k-fold cross-validation or random subsampling for large datasets, also contribute to randomness.

The algorithms themselves can further introduce randomness. Certain architectures incorporate random elements to enhance model quality. For instance, random forests utilize randomness during feature subsampling, while neural networks do so during weight initialization and in other instances.

Managing Randomness in TensorFlow

The best way to grasp the management of randomness in TensorFlow is through practical examples.

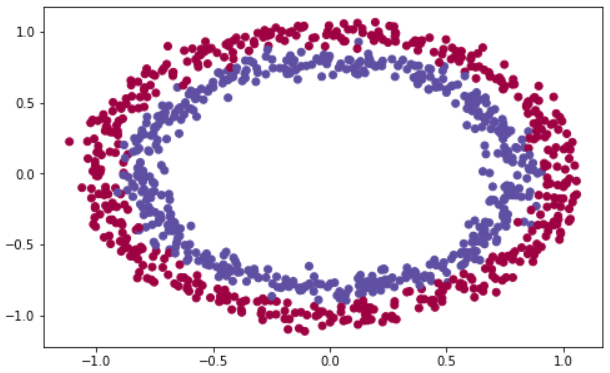

In this section, we will construct a three-layer neural network model designed to classify data points as either 1 or 0. The dataset will be generated using the make_circles function from scikit-learn. Below is the code for data preparation.

Next, we will illustrate the stochastic nature of neural networks by training the model five times on the same dataset and recording the accuracy of each iteration.

The results from the first trial were as follows:

- model_1 accuracy: 0.924

- model_2 accuracy: 0.952

- model_3 accuracy: 0.948

- model_4 accuracy: 0.944

- model_5 accuracy: 0.948

It's important to note that variations in output may occur with each execution of the code.

Setting the Random Seed

One effective approach to mitigate this issue is to establish a fixed seed for the random number generator. This practice guarantees that the same sequence of random numbers is produced each time the code runs.

It's advisable not to fixate on the specific seed value; consistency is what matters. Here’s how the code might change:

When we run this revised code, we observe the following results:

- model_1 accuracy: 0.948

- model_2 accuracy: 0.948

- model_3 accuracy: 0.948

- model_4 accuracy: 0.948

- model_5 accuracy: 0.948

According to the Keras FAQs, the following functions are essential:

- np.random.seed: Sets the initial state for NumPy generated random numbers.

- random.seed: Establishes the initial state for core Python generated random numbers.

- tf.random.set_seed: Initializes the state for TensorFlow backend generated numbers (for further details, consult the documentation).

Persistent Randomness?

If seeding your random number generators doesn’t eliminate randomness, consider using summary statistics after repeating experiments multiple times. For additional insights, refer to “How to Evaluate the Skill of Deep Learning Models.”

These strategies should address most situations, but remember that randomness may still arise from unaccounted factors, such as:

- Third-party frameworks: If your code utilizes external frameworks with distinct random number generators, these too must be seeded.

- GPU usage: The examples discussed assume execution on a CPU. Training on a GPU can introduce variability based on the backend configuration.

In conclusion, randomness is a fundamental characteristic of machine and deep learning that can lead to varying results with each run of the code. When consistent results are required, setting a fixed seed can help ensure reproducibility.

Thank you for reading!

This video titled "Deep Learning Reproducibility with TensorFlow" delves into the essentials of ensuring that your deep learning experiments are reproducible.

In this video titled "Understanding Random Seed in Python," you'll learn how to effectively manage random seeds to achieve consistent results in your programming tasks.